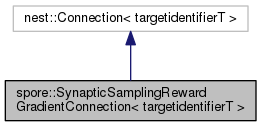

Reward-based synaptic sampling connection class.

#include <synaptic_sampling_rewardgradient_connection.h>

Public Types

| type | name | description |
|---|---|---|
| typedef SynapticSamplingRewardGradientCommonProperties | CommonPropertiesType | Type to use for representing common synapse properties. |
| typedef nest::Connection< targetidentifierT > | ConnectionBase | Shortcut for base class. |

Public Member Functions

| return type | member function | description |
|---|---|---|
| | SynapticSamplingRewardGradientConnection () | |
| | SynapticSamplingRewardGradientConnection (const SynapticSamplingRewardGradientConnection< targetidentifierT > &rhs) | |
| | ~SynapticSamplingRewardGradientConnection () | |
| void | check_connection (nest::Node &s, nest::Node &t, nest::rport receptor_type, double t_lastspike, const CommonPropertiesType &cp) | |
| void | get_status (DictionaryDatum &d) const | |
| void | set_status (const DictionaryDatum &d, nest::ConnectorModel &cm) | Status setter function. |
| void | send (nest::Event &e, nest::thread t, double t_lastspike, const CommonPropertiesType &cp) | |
| void | check_synapse_params (const DictionaryDatum &syn_spec) const | |

Reward-based synaptic sampling connection class.

This connection type implements the reward-based synaptic sampling algorithm introduced in [1,2,3]. The target node to which synapses of this type are connected must be derived from TracingNode. A second node, also derived from TracingNode, must be registered with the synapse model through its reward_transmitter parameter. The synapse model performs a stochastic policy search that tries to maximize the reward signal provided by the reward_transmitter node. At the same time, synaptic weights are constrained by a Gaussian prior with mean $\mu$ and standard deviation $\sigma$. This synapse type cannot change its sign, i.e. synapses are either excitatory or inhibitory depending on the sign of the weight_scale parameter. If synaptic weights fall below a threshold (determined by the parameter_mapping_offset parameter), they are clipped to zero (retracted synapses). The synapse model also implements an optional mechanism that automatically removes retracted synapses from the simulation; it is enabled with the delete_retracted_synapses parameter.

Parameters and state variables

The following parameters can be set in the common properties dictionary (default values and constraints are given in parentheses; the corresponding symbols in the equations below and in references [1,2,3] are given in braces):

| name | type | comment |
|---|---|---|
| learning_rate | double | learning rate (5e-08, ≥0.0) {$\beta$} |
| temperature | double | amplitude of parameter noise (0.1, ≥0.0) {$T_\theta$} |
| gradient_noise | double | amplitude of gradient noise (0.0, ≥0.0) {$T_g$} |
| psp_tau_rise | double | double exponential PSP kernel rise (2.0, >0.0) [ms] {$\tau_r$} |
| psp_tau_fall | double | double exponential PSP kernel decay (20.0, >0.0) [ms] {$\tau_m$} |
| psp_cutoff_amplitude | double | PSP is clipped to 0 below this value (0.0001, ≥0.0) |
| integration_time | double | time of gradient integration (50000.0, >0.0) [ms] {$\tau_g$} |
| episode_length | double | length of eligibility trace (1000.0, >0.0) [ms] {$\tau_e$} |
| weight_update_interval | double | interval of synaptic weight updates (100.0, >0.0) [ms] |
| parameter_mapping_offset | double | offset parameter for computing synaptic weight (3.0) {$\theta_0$} |
| weight_scale | double | scaling factor for the synaptic weight (1.0) {$w_0$} |
| direct_gradient_rate | double | rate of directly applying changes to the synaptic parameter (0.0) {$c_e$} |
| gradient_scale | double | scaling parameter for the gradient (1.0) {$c_g$} |
| max_param | double | maximum synaptic parameter (5.0) |
| min_param | double | minimum synaptic parameter (-2.0) |
| max_param_change | double | maximum synaptic parameter change (40.0, ≥0.0) |
| reward_transmitter | long | GID of the synapse's reward transmitter* |
| bap_trace_id | long | ID of the BAP trace (0, ≥0) |
| dopa_trace_id | long | ID of the dopamine trace (0, ≥0) |
| simulate_retracted_synapses | bool | continue simulating retracted synapses (false) |
| delete_retracted_synapses | bool | delete retracted synapses (false) |

*) reward_transmitter must be set to the GID of a TracingNode before simulation startup.
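As a rough illustration of the defaults and constraints in the table above, the following C++ sketch collects them in a plain struct with a validation routine. The struct name CommonParamsSketch, the -1 marker for an unset reward_transmitter, and the two extra checks (distinct PSP time constants, min_param <= max_param) are assumptions for illustration; this is not the actual SynapticSamplingRewardGradientCommonProperties class or its set_status logic.

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical mirror of the common-properties table; not the SPORE class.
// Symbols from the equations are noted in the comments.
struct CommonParamsSketch {
    double learning_rate = 5e-08;           // beta
    double temperature = 0.1;               // T_theta
    double gradient_noise = 0.0;            // T_g
    double psp_tau_rise = 2.0;              // tau_r [ms]
    double psp_tau_fall = 20.0;             // tau_m [ms]
    double psp_cutoff_amplitude = 0.0001;
    double integration_time = 50000.0;      // tau_g [ms]
    double episode_length = 1000.0;         // tau_e [ms]
    double weight_update_interval = 100.0;  // [ms]
    double parameter_mapping_offset = 3.0;  // theta_0
    double weight_scale = 1.0;              // w_0
    double direct_gradient_rate = 0.0;      // c_e
    double gradient_scale = 1.0;            // c_g
    double max_param = 5.0;
    double min_param = -2.0;
    double max_param_change = 40.0;
    long reward_transmitter = -1;           // assumption: -1 means "not set yet"
    long bap_trace_id = 0;
    long dopa_trace_id = 0;
    bool simulate_retracted_synapses = false;
    bool delete_retracted_synapses = false;

    // Throws std::invalid_argument if a constraint from the table is violated.
    void validate() const {
        auto require = [](bool ok, const char* what) {
            if (!ok) throw std::invalid_argument(what);
        };
        require(learning_rate >= 0.0, "learning_rate must be >= 0");
        require(temperature >= 0.0, "temperature must be >= 0");
        require(gradient_noise >= 0.0, "gradient_noise must be >= 0");
        require(psp_tau_rise > 0.0, "psp_tau_rise must be > 0");
        require(psp_tau_fall > 0.0, "psp_tau_fall must be > 0");
        require(psp_cutoff_amplitude >= 0.0, "psp_cutoff_amplitude must be >= 0");
        require(integration_time > 0.0, "integration_time must be > 0");
        require(episode_length > 0.0, "episode_length must be > 0");
        require(weight_update_interval > 0.0, "weight_update_interval must be > 0");
        require(max_param_change >= 0.0, "max_param_change must be >= 0");
        require(bap_trace_id >= 0, "bap_trace_id must be >= 0");
        require(dopa_trace_id >= 0, "dopa_trace_id must be >= 0");
        // Assumed extra checks (not listed in the table): equation (1) divides
        // by tau_m - tau_r, and clipping needs min_param <= max_param.
        require(psp_tau_rise != psp_tau_fall, "PSP time constants must differ");
        require(min_param <= max_param, "min_param must not exceed max_param");
    }

    // reward_transmitter must be set before the simulation starts.
    void check_ready() const {
        validate();
        if (reward_transmitter < 0) {
            throw std::logic_error("reward_transmitter has not been set");
        }
    }
};
```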

The following parameters can be set in the status dictionary:

| name | type | comment |
|---|---|---|
| synaptic_parameter | double | current synaptic parameter {$\theta(t)$} |
| weight | double | current synaptic weight {$w(t)$} |
| eligibility_trace | double | current eligibility trace {$e(t)$} |
| reward_gradient | double | current reward gradient {$g(t)$} |
| prior_mean | double | mean of the Gaussian prior {$\mu$} |
| prior_precision | double | precision of the Gaussian prior {$c_p$} |
| recorder_times | [double] | time points of parameter recordings* |
| weight_values | [double] | array of recorded synaptic weight values* |
| synaptic_parameter_values | [double] | array of recorded synaptic parameter values* |
| reward_gradient_values | [double] | array of recorded reward gradient values* |
| eligibility_trace_values | [double] | array of recorded eligibility trace values* |
| psp_values | [double] | array of recorded PSP values* |
| recorder_interval | double | interval of synaptic recordings [ms] |
| reset_recorder | bool | clear all recorded values now* (write only) |

*) Recorder fields are read-only. If reset_recorder is set to true, all recorder fields are cleared immediately.
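The recorder fields behave like append-only arrays that are filled at most once per recorder_interval and cleared by reset_recorder. The sketch below models that behavior with hypothetical names (SynapseRecorderSketch, record, reset, is_valid_recorder_interval). It assumes that record is called only after a weight update, as described under Implementation Details, and that a non-positive recorder_interval disables recording; it is not the SPORE recorder.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// True if recorder_interval is (numerically) an integer multiple of
// weight_update_interval, as required under Implementation Details.
bool is_valid_recorder_interval(double recorder_interval,
                                double weight_update_interval,
                                double tol = 1e-9) {
    assert(weight_update_interval > 0.0);
    const double ratio = recorder_interval / weight_update_interval;
    return std::fabs(ratio - std::round(ratio)) < tol;
}

// Hypothetical recorder holding the read-only arrays from the status table.
struct SynapseRecorderSketch {
    double recorder_interval = 0.0;  // [ms]; assumption: <= 0 disables recording
    double next_record_time = 0.0;   // earliest time of the next sample [ms]
    std::vector<double> recorder_times, weight_values, synaptic_parameter_values,
        reward_gradient_values, eligibility_trace_values, psp_values;

    // Intended to be called right after each weight update at time t [ms].
    void record(double t, double w, double theta, double g, double e, double y) {
        if (recorder_interval <= 0.0 || t < next_record_time) return;
        recorder_times.push_back(t);
        weight_values.push_back(w);
        synaptic_parameter_values.push_back(theta);
        reward_gradient_values.push_back(g);
        eligibility_trace_values.push_back(e);
        psp_values.push_back(y);
        next_record_time = t + recorder_interval;
    }

    // Effect of setting reset_recorder = true: clear all recorded arrays.
    void reset() {
        for (auto* v : {&recorder_times, &weight_values, &synaptic_parameter_values,
                        &reward_gradient_values, &eligibility_trace_values, &psp_values}) {
            v->clear();
        }
    }
};
```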

Implementation Details

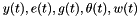

This connection type is a diligent synapse model: its updates are triggered at a regular interval, which is ensured by the ConnectionUpdateManager. The state of each synapse consists of the variables $y(t)$, $e(t)$, $g(t)$, $\theta(t)$ and $w(t)$. The variable $y(t)$ is the presynaptic spike train filtered with a PSP kernel $\epsilon(t)$ of the form

![\[ \epsilon(t) \;=\; \frac{\tau_r}{\tau_m - \tau_r}\left( e^{-\frac{t}{\tau_m}} - e^{-\frac{t}{\tau_r}} \right)\;. \hspace{24px} (1) \]](form_75.png)
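Because the kernel in equation (1) is a difference of two exponentials, the filtered spike train $y(t)$ can be tracked without storing spike times: two traces decaying with $\tau_m$ and $\tau_r$ are both incremented on each presynaptic spike, and their scaled difference equals the sum of kernels. The following sketch assumes this recursive formulation with exact per-step decay factors; SPORE may compute $y(t)$ differently, and psp_cutoff_amplitude is not modeled. The names psp_kernel and PspTraceSketch are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Kernel of equation (1) for t >= 0 [ms]; zero for t < 0 (causal).
double psp_kernel(double t, double tau_r = 2.0, double tau_m = 20.0) {
    assert(tau_r > 0.0 && tau_m > 0.0 && tau_r != tau_m);
    if (t < 0.0) return 0.0;
    return tau_r / (tau_m - tau_r) * (std::exp(-t / tau_m) - std::exp(-t / tau_r));
}

// Recursive evaluation of y(t) = sum over presynaptic spikes of eps(t - t_spike).
// Both traces jump by 1 on a spike and decay exactly over each step of length dt.
class PspTraceSketch {
public:
    PspTraceSketch(double tau_r, double tau_m, double dt)
        : scale_(tau_r / (tau_m - tau_r)),
          decay_r_(std::exp(-dt / tau_r)),
          decay_m_(std::exp(-dt / tau_m)) {
        assert(tau_r > 0.0 && tau_m > 0.0 && tau_r != tau_m && dt > 0.0);
    }
    void add_spike() { a_r_ += 1.0; a_m_ += 1.0; }
    void step() { a_r_ *= decay_r_; a_m_ *= decay_m_; }
    double value() const { return scale_ * (a_m_ - a_r_); }

private:
    double scale_, decay_r_, decay_m_;
    double a_r_ = 0.0, a_m_ = 0.0;
};
```

With the defaults $\tau_r = 2$ ms and $\tau_m = 20$ ms, a single spike followed by 100 steps of 0.1 ms yields the same value as evaluating the closed-form kernel at 10 ms, up to rounding.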

A node derived from type TracingNode must be registered with the synapse model through its reward_transmitter parameter. The trace of this node with id dopa_trace_id is used as the reward signal $dopa(t)$. The trace of the postsynaptic neuron with id bap_trace_id is used as the back-propagating signal $bap(t)$. The synapse then solves the following set of differential equations:

![\[ \frac{d e(t)}{dt} \;=\; -\frac{1}{\tau_e} e(t) \,+\, w(t)\,y(t)\,bap(t) \hspace{24px} (2) \]](form_77.png)

![\[ d g(t) \;=\; \bigg( -\frac{1}{\tau_g} g(t) \,+\, dopa(t)\,e(t) \bigg) dt \,+\, T_g\,d \mathcal{W}_g \hspace{24px} (3) \]](form_78.png)

![\[ d \theta(t) \;=\; \beta\,\bigg( c_p (\mu - \theta(t)) + c_g\,g(t) + c_e \, dopa(t) \,e(t) \bigg) dt \,+\, \sqrt{ 2 T_\theta \beta } \, d\mathcal{W}_{\theta} \hspace{24px} (4) \]](form_87.png)

![\[ w(t) \;=\; w_0 \, \exp ( \theta(t) - \theta_0 ) \hspace{24px} (5) \]](form_85.png)
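An explicit Euler (Euler-Maruyama) step of equations (2) and (3) might look as follows. This is a sketch under assumptions: the inputs w, y, bap and dopa are passed in as plain numbers rather than read from TracingNode traces, the old value of $e(t)$ is used on the right-hand side of (3), and the Wiener increment $d\mathcal{W}_g$ is drawn as $\sqrt{dt}$ times a standard normal sample. TraceState and euler_step_traces are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <random>

struct TraceState {
    double e = 0.0;  // eligibility trace e(t)
    double g = 0.0;  // reward gradient g(t)
};

// One Euler-Maruyama step of length dt [ms] for equations (2) and (3).
// w, y, bap and dopa are the current weight, PSP trace, back-propagating
// trace and reward trace; here they are plain inputs (assumption).
void euler_step_traces(TraceState& s, double dt, double w, double y, double bap,
                       double dopa, double tau_e, double tau_g, double T_g,
                       std::mt19937& rng) {
    assert(dt > 0.0 && tau_e > 0.0 && tau_g > 0.0 && T_g >= 0.0);
    const double e_old = s.e;  // explicit Euler: right-hand sides use old values
    s.e += dt * (-e_old / tau_e + w * y * bap);
    double noise = 0.0;
    if (T_g > 0.0) {
        // Assumption: dW_g is drawn as sqrt(dt) times a standard normal sample.
        std::normal_distribution<double> normal(0.0, 1.0);
        noise = T_g * std::sqrt(dt) * normal(rng);
    }
    s.g += dt * (-s.g / tau_g + dopa * e_old) + noise;
}
```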

The precision of the prior in equation (4) relates to the standard deviation as $c_p = 1/\sigma^2$. Setting $c_p = 0$ corresponds to a non-informative (flat) prior.
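The role of temperature and prior precision can be made explicit with a short derivation. Assuming no reward input ($g(t) = 0$ and $dopa(t) = 0$) and ignoring the clipping at min_param and max_param, equation (4) is an Ornstein-Uhlenbeck process, and its stationary distribution follows from the standard result for $dx = k(\mu - x)\,dt + s\,d\mathcal{W}$, whose stationary law is $\mathcal{N}(\mu, s^2/(2k))$ for $k > 0$:

```latex
d\theta(t) = \beta c_p \big(\mu - \theta(t)\big)\,dt + \sqrt{2 T_\theta \beta}\, d\mathcal{W}_\theta
\quad\Rightarrow\quad k = \beta c_p, \qquad s^2 = 2 T_\theta \beta

\operatorname{Var}[\theta] = \frac{s^2}{2k} = \frac{2 T_\theta \beta}{2 \beta c_p} = \frac{T_\theta}{c_p} = T_\theta\, \sigma^2

\theta \sim \mathcal{N}\big(\mu,\; T_\theta \sigma^2\big) \qquad (\text{stationary, for } \beta > 0,\ c_p > 0)
```

Under these assumptions, $T_\theta = 1$ makes $\theta$ sample exactly from the Gaussian prior, while smaller temperatures (the default is 0.1) narrow the distribution. With $c_p = 0$ the drift vanishes, so no Gaussian stationary distribution exists and only the clipping bounds confine $\theta$.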

The differential equations (2-5) are solved using Euler integration. The dynamics of the postsynaptic term $y(t)$, the eligibility trace $e(t)$ and the reward gradient $g(t)$ are updated at each NEST time step. The dynamics of $\theta(t)$ and $w(t)$ are updated on a time grid given by weight_update_interval, and the synaptic weights remain constant between two updates. The synapse recorder is only invoked after each weight update, which means that recorder_interval must be a multiple of weight_update_interval. Synaptic parameters are clipped at min_param and max_param, and parameter gradients are clipped at ±max_param_change.

Synaptic weights of synapses for which $\theta(t)$ falls below 0 are clipped to 0 (retracted synapses). If simulate_retracted_synapses is set to false, the simulation of $y(t)$ and $e(t)$ is not continued for retracted synapses. This means that only the stochastic dynamics of $\theta(t)$ are simulated until the synapse is reformed. During this time, the reward gradient $g(t)$ is fixed to 0. If delete_retracted_synapses is set to true, retracted synapses are removed from the network by the garbage collector of the ConnectionUpdateManager.
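The grid update of $\theta(t)$ and the mapping to $w(t)$ described above could be sketched as follows (C++17, for std::clamp). Several details are assumptions rather than documented behavior: max_param_change is applied to the bracketed drift term of equation (4), $dopa(t)$ and $e(t)$ are sampled at the update time for the $c_e$ term, dt must be given in whatever unit learning_rate is defined for, and prior_mean and prior_precision get placeholder values because no defaults are documented above. GridParamsSketch, update_theta and weight_from_theta are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <random>

struct GridParamsSketch {
    double beta = 5e-08;            // learning_rate
    double T_theta = 0.1;           // temperature
    double mu = 0.0;                // prior_mean: placeholder, no default documented
    double c_p = 0.0;               // prior_precision = 1/sigma^2: placeholder
    double c_g = 1.0;               // gradient_scale
    double c_e = 0.0;               // direct_gradient_rate
    double theta_0 = 3.0;           // parameter_mapping_offset
    double w_0 = 1.0;               // weight_scale (its sign fixes the synapse sign)
    double min_param = -2.0;
    double max_param = 5.0;
    double max_param_change = 40.0;
};

// Equation (5) with retraction: theta < 0 gives a retracted synapse (w = 0).
double weight_from_theta(double theta, const GridParamsSketch& p) {
    if (theta < 0.0) return 0.0;
    return p.w_0 * std::exp(theta - p.theta_0);
}

// One update of equation (4) over a weight-update interval dt.
// Assumptions: max_param_change clips the bracketed drift, and dopa/e are
// the values at the update time.
double update_theta(double theta, double g, double dopa, double e, double dt,
                    const GridParamsSketch& p, std::mt19937& rng) {
    assert(dt > 0.0 && p.beta >= 0.0 && p.T_theta >= 0.0);
    double drift = p.c_p * (p.mu - theta) + p.c_g * g + p.c_e * dopa * e;
    drift = std::clamp(drift, -p.max_param_change, p.max_param_change);
    std::normal_distribution<double> normal(0.0, 1.0);
    const double noise = std::sqrt(2.0 * p.T_theta * p.beta * dt) * normal(rng);
    return std::clamp(theta + p.beta * drift * dt + noise, p.min_param, p.max_param);
}
```

For a retracted synapse with simulate_retracted_synapses set to false, a caller would pass g = 0 and skip updating $y(t)$ and $e(t)$, so that only the prior drift and the noise act on $\theta(t)$.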

References

[1] David Kappel, Robert Legenstein, Stefan Habenschuss, Michael Hsieh and Wolfgang Maass. A Dynamic Connectome Supports the Emergence of Stable Computational Function of Neural Circuits through Reward-Based Learning. eNeuro, 2018. https://doi.org/10.1523/ENEURO.0301-17.2018

[2] David Kappel, Robert Legenstein, Stefan Habenschuss, Michael Hsieh and Wolfgang Maass. Reward-based self-configuration of neural circuits. 2017. https://arxiv.org/abs/1704.04238

[3] Zhaofei Yu, David Kappel, Robert Legenstein, Sen Song, Feng Chen and Wolfgang Maass. CaMKII activation supports reward-based neural network optimization through Hamiltonian sampling. 2016. https://arxiv.org/abs/1606.00157

spore::SynapticSamplingRewardGradientConnection< targetidentifierT >::SynapticSamplingRewardGradientConnection ()

Default Constructor.

spore::SynapticSamplingRewardGradientConnection< targetidentifierT >::SynapticSamplingRewardGradientConnection (const SynapticSamplingRewardGradientConnection< targetidentifierT > & rhs)

Copy Constructor.

spore::SynapticSamplingRewardGradientConnection< targetidentifierT >::~SynapticSamplingRewardGradientConnection ()

Destructor.

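void spore::SynapticSamplingRewardGradientConnection< targetidentifierT >::check_connection (nest::Node & s, nest::Node & t, nest::rport receptor_type, double t_lastspike, const CommonPropertiesType & cp) [inline]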

Checks if the type of the postsynaptic node is supported. Throws an IllegalConnection exception if the postsynaptic node is not derived from TracingNode.
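A common C++ idiom for this kind of check is a dynamic_cast on the target node. The sketch below uses minimal stand-in classes (Node, TracingNode, PlainNode, IllegalConnection) instead of the NEST and SPORE types, so it only illustrates the technique; the actual check_connection signature and implementation differ.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Minimal stand-ins for the NEST/SPORE class hierarchy (hypothetical).
struct Node { virtual ~Node() = default; };  // polymorphic base, needed for dynamic_cast
struct TracingNode : Node {};
struct PlainNode : Node {};                  // a target type that is not supported

struct IllegalConnection : std::runtime_error {
    explicit IllegalConnection(const std::string& msg) : std::runtime_error(msg) {}
};

// Throws IllegalConnection unless the target is derived from TracingNode.
void check_target_is_tracing_node(Node& target) {
    if (dynamic_cast<TracingNode*>(&target) == nullptr) {
        throw IllegalConnection("postsynaptic node must be derived from TracingNode");
    }
}
```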

void spore::SynapticSamplingRewardGradientConnection< targetidentifierT >::check_synapse_params (const DictionaryDatum & syn_spec) const

Checks the syn_spec dictionary for parameters that are not allowed for this connection. Issues a warning or throws an error if such a parameter is found.
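The check can be pictured as a scan over the keys of syn_spec. In this sketch the dictionary is modeled as a std::map<std::string, double> rather than a DictionaryDatum, and the sets of keys that trigger a warning or an error are supplied by the caller, because the actual key lists used by this connection are not documented here. check_syn_spec is a hypothetical name.

```cpp
#include <cassert>
#include <iostream>
#include <map>
#include <set>
#include <stdexcept>
#include <string>

// Hypothetical stand-in for check_synapse_params: syn_spec is modeled as a
// std::map instead of a DictionaryDatum, and the key sets are caller-supplied.
// Returns the number of warnings issued; throws on the first forbidden key.
int check_syn_spec(const std::map<std::string, double>& syn_spec,
                   const std::set<std::string>& warn_keys,
                   const std::set<std::string>& error_keys) {
    int warnings = 0;
    for (const auto& entry : syn_spec) {
        const std::string& key = entry.first;
        if (error_keys.count(key) != 0) {
            throw std::invalid_argument("parameter not allowed in syn_spec: " + key);
        }
        if (warn_keys.count(key) != 0) {
            std::cerr << "warning: parameter '" << key
                      << "' is not allowed for this connection\n";
            ++warnings;
        }
    }
    return warnings;
}
```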

void spore::SynapticSamplingRewardGradientConnection< targetidentifierT >::get_status (DictionaryDatum & d) const

Status getter function.

void spore::SynapticSamplingRewardGradientConnection< targetidentifierT >::send (nest::Event & e, nest::thread thread, double t_last_spike, const CommonPropertiesType & cp)

Sends an event to the postsynaptic neuron. This updates the synapse state and synaptic weights up to the current slice origin and then sends the spike event. This method is also triggered by the ConnectionUpdateManager to indicate that the synapse is running out of date. In this case, an invalid rport of -1 is passed and the spike is not delivered to the postsynaptic neuron.
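The catch-up behavior of send can be illustrated with a lazily updated object that advances its state in fixed steps up to the requested time and then delivers the spike unless the rport is -1. LazySynapseSketch and its step callback are hypothetical; the real method receives a nest::Event and the common properties, and its update logic is not shown in this documentation.

```cpp
#include <cassert>
#include <functional>
#include <utility>

// Hypothetical lazily updated synapse. step(t) advances the synapse state by
// one step of length dt starting at time t.
class LazySynapseSketch {
public:
    LazySynapseSketch(double dt, std::function<void(double)> step)
        : dt_(dt), step_(std::move(step)) {
        assert(dt > 0.0);
    }

    // Brings the state up to t_slice_origin, then delivers the spike unless
    // rport == -1, which marks an update-only call. Returns true if delivered.
    bool send(double t_slice_origin, int rport) {
        advance_to(t_slice_origin);
        if (rport == -1) return false;
        ++delivered_;
        return true;
    }

    double last_update() const { return t_last_; }
    int delivered() const { return delivered_; }

private:
    void advance_to(double t) {
        const double eps = 1e-9 * dt_;  // tolerance for floating-point accumulation
        while (t_last_ + dt_ <= t + eps) {
            step_(t_last_);
            t_last_ += dt_;
        }
    }

    double dt_;
    std::function<void(double)> step_;
    double t_last_ = 0.0;
    int delivered_ = 0;
};
```

Keeping the state update separate from delivery means an update-only call and a real spike share exactly the same catch-up path.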

Parameters

| name | comment |
|---|---|
| e | the spike event. |
| thread | the id of the connection's thread. |
| t_last_spike | the time of the last spike. |
| cp | the synapse type common properties. |

void spore::SynapticSamplingRewardGradientConnection< targetidentifierT >::set_status (const DictionaryDatum & d, nest::ConnectorModel & cm)

Status setter function.

1.8.13
